Although the actions of pilots historically have been responsible for the majority of aircraft accidents,1 most do not arise from any mental disorder. In fact, less than 0.3 percent of 2,758 fatal civil aircraft accidents in the United States during a recent 10-year period were caused by suicide, and all involved general aviation (GA) flights, not commercial airline operations.2 Instead, most accidents are caused by inadvertent errors made by flight crewmembers — errors that arise from normal physiological and psychological limitations inherent in the human condition. This article examines the role these limitations play in piloting performance and suggests strategies to help pilots minimize their influence on the flight deck.

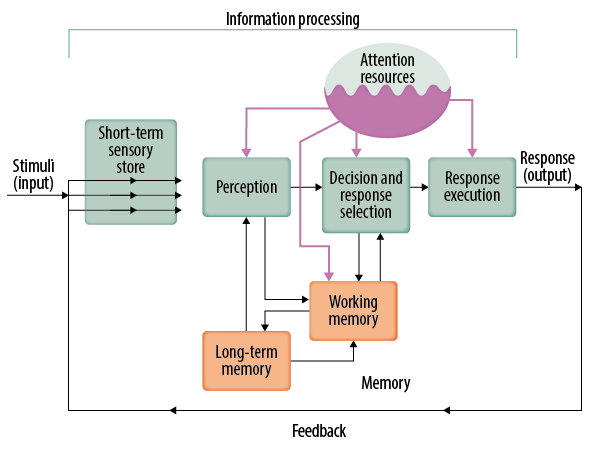

Understanding human error follows from understanding human thinking, which is primarily the domain of cognitive psychology. Using the computer as a metaphor, this approach to the study of human thought and behavior postulates that inputs (stimuli, or other information) from the environment are received by the senses and are processed before a response (output) is made. The model assumes that information processing takes time as inputs travel from left to right, but also rejects the purely behaviorist notion that humans are merely passive responders to external stimuli; rather, people actively search for information, often processing from right to left.

A more elaborate model, developed by one of the world’s foremost researchers in the field of aviation human factors, Christopher Wickens, is found in Figure 1. Information processing begins with stimulation of sensory receptors in the eyes, ears, skin, vestibular apparatus, etc. (Table 1).3 It is generally agreed that some sort of attentional mechanism filters these sensations (inputs), often limiting the type and amount of information that is perceived, or interpreted, by the brain. These perceptions are influenced by previous experience (long-term memory) and current inputs (short-term memory, or working memory). Higher level cognitive functioning, such as decision making and problem solving, is also memory-dependent and influenced by the accuracy of one’s perception of the situation, or “situational awareness.” Finally, the model recognizes that the human brain, like a computer, has limited processing capacity, and if overloaded with inputs (task saturation), the attentional resources allocated to the various mental operations needed to effectively perform a task will significantly diminish.

Figure 1 — Information Processing Model

Source: U.S. Federal Aviation Administration (FAA). “Wickens’ Model.” FAA Human Factors Awareness Course.

Table 1: Human Sensation

Specialized senses detect specific types of stimuli and are important for pilots to obtain accurate information about their external world. Some rank higher when it comes to flying: Vision and hearing are crucial for flight while gustation (sense of taste) usually is not.

| Human Sensation | Description |
|---|---|
| Vision | Sense of sight. Photoreceptors (rods and cones) in the retina of each eye are stimulated by light, causing a chemical reaction that converts light energy into neural signals that are sent to the visual cortex via the optic nerve. |
| Audition | Sense of hearing. Hair-like receptors in the organ of Corti, located in the cochlea of the inner ear, are stimulated by sound waves and create neural impulses that are sent to the auditory cortex via the auditory nerve. |
| Cutaneous | Sense of touch. Different types of receptors in the skin specifically sense pressure, temperature or pain. |
| Olfaction | Sense of smell. Olfactory receptors located in the nasal cavity chemically react to a variety of odors. Specific receptors detect specific smells. |
| Vestibular | Sense of balance. Receptors in the cupula, located in the semicircular canals in the inner ear, move in response to angular acceleration while the membrane located in the adjacent otolith bodies moves in response to linear acceleration. |
| Somatosensory | Sense of overall position and movement of body parts in relation to each other. Receptors in the body’s skin, muscles and joints respond to gravitational acceleration, providing sense of body position and movement. Sometimes called the kinesthetic or postural sensation, it is more commonly referred to as the “seat-of-the-pants” sensation because accelerations experienced in flight can be confused with gravity. |

Source: Dale Wilson

Through experiments, cognitive psychologists often study these processes in isolation but recognize that they are interdependent. For example, a distraction (e.g., a wing flap anomaly) may lead to a breakdown in voluntary attention, causing a pilot to focus too much on the flap indication, which, in turn, could lead to forgetting to accomplish a checklist item such as extending the landing gear before landing. Also, research shows that any distortions upstream in the model (e.g., sensory processing and/or perception) will adversely affect downstream cognitive functions such as decision making and response selection and execution (Table 2).

Table 2

| Error Type | Accident Example |
|---|---|
| Perception errors: visual perception | In November 2013, a Boeing 747 Dreamlifter, destined for McConnell Air Force Base in Wichita, Kansas, U.S., unintentionally landed on a 6,100-ft (1,859-m) runway at Col. James Jabara Airport about 8 nm (15 km) north of McConnell. Less than two months later, a Southwest Airlines Boeing 737, on approach to Branson Airport in Missouri, mistakenly landed on a 3,738-ft (1,139-m) runway at M. Graham Clark Downtown Airport, about 5 nm (9 km) north of Branson. The crews of both aircraft were conducting visual approaches at night in visual meteorological conditions (VMC) and thought they saw the correct airport and runway.1 |
| Perception errors: auditory perception | In February 1989, a Boeing 747 owned by FedEx and operated by Flying Tigers crashed into a hillside short of Runway 33 at Kuala Lumpur, Malaysia, killing all four occupants. While the 747 was on a nondirectional beacon (NDB) approach, air traffic control provided the following clearance: “descend two four zero zero” (2,400 ft), which the crew misinterpreted as “descend to four zero zero” (400 ft).2 |
| Attention errors | All four occupants were killed when a Eurocopter AS350 helicopter crashed in August 2011, following an engine failure due to fuel exhaustion near the Midwest National Air Center, in Mosby, Missouri, U.S. The investigation determined that the pilot missed three opportunities to detect the low fuel status, in part because he was frequently texting on his cell phone, “a self-induced distraction,” according to the U.S. National Transportation Safety Board (NTSB) report, “that took his attention away from his primary responsibility to ensure safe flight operations.”3 |
| Memory failures | The pilot of a Hughes 369 was killed in December 2008 after losing control of the helicopter, which struck the water west of the Solomon Islands during an attempted liftoff from a fishing vessel. The helicopter mechanic, who witnessed the event, saw the pilot trying unsuccessfully to remove the tail rotor pedal lock in flight; the pilot had forgotten to remove the lock before takeoff.4 |
| Memory failures | All three passengers were killed in September 2003 when a Cessna 206 struck terrain in Greenville, Maine, U.S., and came to rest inverted after the pilot failed to set the fuel selector to the proper position, resulting in fuel starvation during the initial climb.5 |
| Decision errors | Shortly after takeoff from Springfield, Illinois, U.S., in October 1983, Air Illinois Flight 701 lost electrical power from the left generator. The first officer erroneously shut down the right generator and was unable to get it back on line. All 10 occupants were killed after the crew lost control of the Hawker Siddeley 748 while approaching their destination of Southern Illinois Airport in night instrument meteorological conditions. The probable cause of the accident was the “captain’s decision to continue the flight toward the more distant destination airport after the loss of DC [direct current] electrical power from both airplane generators instead of returning to the nearby departure airport,” the NTSB said.6 |
| Decision errors | A Eurocopter AS350 helicopter, operated by the Alaska Department of Public Safety under visual flight rules at night, struck terrain while maneuvering during a search and rescue flight near Talkeetna, Alaska, U.S., in March 2013, killing all three occupants. The pilot, who was not current for instrument flying, became disoriented after flying into instrument meteorological conditions. The NTSB said the probable cause of this accident was “the pilot’s decision to continue flight under visual flight rules into deteriorating weather conditions.”7 |

Source: Dale Wilson

Lessons learned from aircraft accident investigations reveal how normal, everyday human cognitive limitations in perception, attention, memory and decision making play a causal role in accidents. The following examples highlight some of these limitations.

Perceptual Errors

In clear flying weather, flight crews have missed or misperceived visual cues necessary to avoid a midair collision (MAC) in the day and controlled flight into terrain (CFIT) at night. They also have failed to detect or correctly perceive vital auditory cues in air traffic control communications. For example, numerous incidents (and some accidents) have resulted from call sign confusion, which occurs when controllers issue, and/or pilots respond to, a clearance intended for another aircraft with a similar call sign.

Attention Failures

Misperceptions are often the result of breakdowns in selective attention. Pilots attend to the wrong stimulus while failing to pay attention to the correct one. CFIT, loss of control and other fatal accidents have occurred because pilots were preoccupied with nonessential tasks, or were saturated with tasks and their diminished attentional resources were unable to cope with all of them.

Forgetting

Successful flight performance is memory-dependent, but pilots sometimes forget information that they have learned (retrospective memory failure) or forget to execute an important task in the future (prospective memory failure). Examples of the latter include forgetting to extend the landing gear before landing, an event so common that a cynical adage has developed among aviators: “There are only two kinds of pilots: those who have landed with the gear up and those who will.” In addition, fatal commercial airline accidents have occurred because flight crews have forgotten, whether due to distraction, task overload or both, to properly configure the aircraft for takeoff.

Decision Making

One of the most complex of mental operations is decision making. Any deficiencies in sensory processing, perception, attention or memory can lead to poor decisions, but decision making comes with its own limitations. For example, biases are often evident in the decision making of GA pilots, and some commercial pilots, who attempt visual flight rules (VFR) flight into instrument meteorological conditions (IMC). Whereas less than 18 percent of all U.S. GA accidents are fatal, some 95 percent of U.S. GA VFR-into-IMC accidents in 2012 resulted in fatalities.4 Empirical studies and accident investigations indicate that several decision biases have had a part in pilots’ decisions to continue VFR flight into IMC: invulnerability, optimism bias (the tendency to be overly optimistic in envisioning the likely outcome of a decision), ability bias (the tendency to overestimate one’s own skill relative to that of other pilots), overconfidence and escalation bias (leading to entrapment by acceptance of increasing risks).5
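The gap between those two fatality percentages can be expressed as a simple ratio. The short Python sketch below is illustrative only: the function name is hypothetical, and because the text gives the overall GA figure only as “less than 18 percent,” the value 0.18 is assumed as an upper bound, which makes the computed ratio a lower bound.

```python
def lethality_ratio(specific_fatal_share: float, overall_fatal_share: float) -> float:
    """Return how many times more often one accident type is fatal than the baseline."""
    if not 0 < overall_fatal_share <= 1 or not 0 <= specific_fatal_share <= 1:
        raise ValueError("shares must be fractions between 0 and 1")
    return specific_fatal_share / overall_fatal_share


# Figures quoted in the text. 0.18 is an assumed upper bound ("less than 18 percent").
vfr_into_imc_fatal_share = 0.95
overall_ga_fatal_share_upper_bound = 0.18

ratio = lethality_ratio(vfr_into_imc_fatal_share, overall_ga_fatal_share_upper_bound)
print(f"VFR-into-IMC accidents were at least {ratio:.1f} times as likely to be fatal")
```

Under that assumption, a VFR-into-IMC accident was more than five times as likely to be fatal as a GA accident in general.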

Several conclusions and recommendations can be made from an understanding of human cognitive performance and its role in aircraft accidents.

First, there are specific flight situations in which particular limitations pose a greater risk. For example, conducting a visual landing approach in dark-night conditions in clear weather increases the likelihood of a pilot experiencing a perceptual illusion (e.g., black-hole effect, a threat present on dark nights when there are no ground lights between an aircraft and the runway threshold) with its risk of a CFIT accident short of the runway. Distraction-induced attention and memory failures are also more likely, and more consequential, during certain phases of flight. Flight crews should, therefore, seek to fully understand the exact nature of these limitations and the context in which they are likely to manifest themselves on the flight deck.

In addition, there are effective strategies that pilots can use to overcome these cognitive limitations. For example, despite the inherent limitations of the “see-and-avoid” method in detecting traffic in flight, best practice strategies exist to overcome them: Pilots can utilize an effective scanning technique; increase aircraft conspicuity (e.g., turn on landing lights); ensure the transponder is correctly set; use party-line radio information to increase situational awareness; and comply with a variety of rules designed to reduce the chance of an MAC (such as maintaining a sterile flight deck). Similarly, there are practices and procedures designed to help improve attention and memory while carrying out the tasks involved in piloting an aircraft: Pilots can use effective monitoring strategies, comply with standard operating procedures (SOPs) and employ strategies to effectively manage mental workload and distractions.

Also, airlines can assist pilots by providing training in formal risk- and error-management behaviors. While flight crews undergo extensive training to avoid committing errors on the flight deck, most airlines acknowledge that pilots are subject to the cognitive limitations that all humans share, and that despite their best efforts to avoid them, errors made by pilots are inevitable. Rather than pretend these errors don’t exist, or punish pilots when the errors occur, flight departments can arm pilots with tools they need to effectively manage error on the flight deck.

Threat and Error Management

One such approach to reducing risk in aviation operations is threat and error management (TEM). Although TEM is used primarily by trained observers as an observation tool during line operations safety audits (LOSA),6 flight departments can also use the framework as a training tool to assist crews in effectively managing risks on the flight deck, including those arising from limitations in human performance. As its name implies, TEM is based on the assumption that threats to safe flight are always present and errors made by pilots are unavoidable.

TEM involves using countermeasures to anticipate, detect, avoid and/or mitigate the threats pilots may face, and to detect, capture and correct the errors they do make. An error is an improper action (or lack of action) committed by the flight crew (e.g., manual flying errors, monitoring errors and improper use of checklists), while a threat is generally a condition or event outside their influence (e.g., systems malfunction, adverse weather, distractions from others). Either can lead to an undesired aircraft state — such as an improperly configured aircraft, an unstabilized approach or an undesired trajectory toward higher terrain — which, if not properly managed, could lead to an accident.
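The distinction between threats and errors described above can be made concrete with a small data model. The Python sketch below is a teaching illustration only: the names `Event`, `Flight`, `crew_originated`, `managed` and `unmanaged()` are assumptions introduced here and do not come from any LOSA tool or FAA guidance.

```python
from dataclasses import dataclass, field
from enum import Enum


class Kind(Enum):
    THREAT = "threat"  # condition or event outside the crew's influence
    ERROR = "error"    # improper action, or lack of action, by the crew


@dataclass
class Event:
    description: str
    crew_originated: bool
    managed: bool = False  # True once a countermeasure has dealt with it

    @property
    def kind(self) -> Kind:
        # Per the definitions in the text, crew-originated events are errors.
        return Kind.ERROR if self.crew_originated else Kind.THREAT


@dataclass
class Flight:
    events: list[Event] = field(default_factory=list)

    def unmanaged(self) -> list[Event]:
        """Events that could still lead to an undesired aircraft state."""
        return [e for e in self.events if not e.managed]


flight = Flight([
    Event("adverse weather on arrival", crew_originated=False, managed=True),
    Event("checklist item skipped", crew_originated=True),
])

for event in flight.unmanaged():
    print(f"{event.kind.value}: {event.description}")
```

In this toy example the weather threat has already been managed, so only the skipped checklist item remains as an error the crew still needs to detect and correct.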

Countermeasures are behaviors, procedures or devices designed to avoid or mitigate threats and errors. Whether formally identified as TEM countermeasures or not, airline safety systems have evolved to include best practice countermeasures designed to effectively manage risk on the flight deck. These behaviors are designed to overcome the inherent limitations, as noted, in perception, attention, memory and other cognitive processes, and include:

- Adhering to SOPs;

- Complying with sterile flight deck rules;

- Managing workload and distraction;

- Monitoring/cross-checking;

- Habitually using checklists;

- Using verbal callouts;

- Conducting stabilized approaches; and,

- Managing automation.

These are generic one-size-fits-all strategies designed to counter a variety of unforeseen threats and errors. However, flight crews must also use targeted countermeasures to guard against specific threats and/or errors. For example, domain-specific knowledge and behaviors are vital when attempting to manage the risks associated with flying near thunderstorms, at high altitudes or at night. Similarly, threat-specific countermeasures are needed to avoid an MAC, a runway incursion or encounters with airframe icing.

Ancient Roman philosopher Seneca the Younger observed that “to err is human.” But he also noted that “to persist [with the error] is of the devil.” A successful approach to managing risk on the flight deck acknowledges the former but uses effective best practice strategies to avoid the latter.

Dale Wilson is a professor in the Aviation Department at Central Washington University in Ellensburg, Washington, U.S. He teaches courses in flight crew physiology, psychology and risk management, and writes about flight crew safety matters, including co-authoring the recent book, Managing Risk: Best Practices for Pilots.

Notes

1. Wiegmann, Douglas; Shappell, Scott. U.S. Federal Aviation Administration (FAA) Report DOT/FAA/AM-01/3, A Human Error Analysis of Commercial Aviation Accidents Using the Human Factors Analysis and Classification System (HFACS). February 2001. Available at <www.faa.gov/data_research/research/med_humanfacs/oamtechreports/2000s/2001/>. Depending on the sector being described, flight crew error has been implicated in 70 to 80 percent of civilian and military aircraft accidents.

2. Lewis, Russell J.; Forster, Estrella M.; Whinnery, James E.; Webster, Nicholas L. FAA Report DOT/FAA/AM-14/2, Aircraft-Assisted Pilot Suicides in the United States, 2003-2012. February 2014. Available at <www.faa.gov/data_research/research/med_humanfacs/oamtechreports/2010s/2014/>.

3. Experts believe that humans possess more than the five basic senses. For example, within the broad category of touch, there are separate types of sensory receptors for pressure, temperature and pain.

4. Aircraft Owners and Pilots Association Air Safety Institute. 24th Joseph T. Nall Report: General Aviation Accidents in 2012. 2015.

5. Wilson, Dale; Binnema, Gerald. “Pushing Weather” (Chapter 4). Managing Risk: Best Practices for Pilots. ASA, Newcastle, Washington, U.S. 2014.

6. FAA. Advisory Circular AC 120-90, Line Operations Safety Audits. April 27, 2006.

Image credit: © DrAfter123 | iStockphoto