Maintenance human factors is sometimes discussed as if the concept were radically new. Three generations ago, in the late 1940s, it was recognized that it was a designer’s obligation to eliminate the potential for misassembly in his or her designs. “If anything can go wrong, it will” (the so-called Murphy’s Law) is not a pessimistic statement but a long-established design law. It is said to have been devised after the misassembly of instrumentation on a rocket sled being used for aeromedical tests on a California lake bed [1].

Two generations ago, in 1964, the U.K. Accidents Investigation Branch (now the U.K. Air Accidents Investigation Branch [AAIB]) commented that “faulty maintenance” was rare, but expressed concern over designs that did not consider Murphy’s Law and could allow misassembly [2].

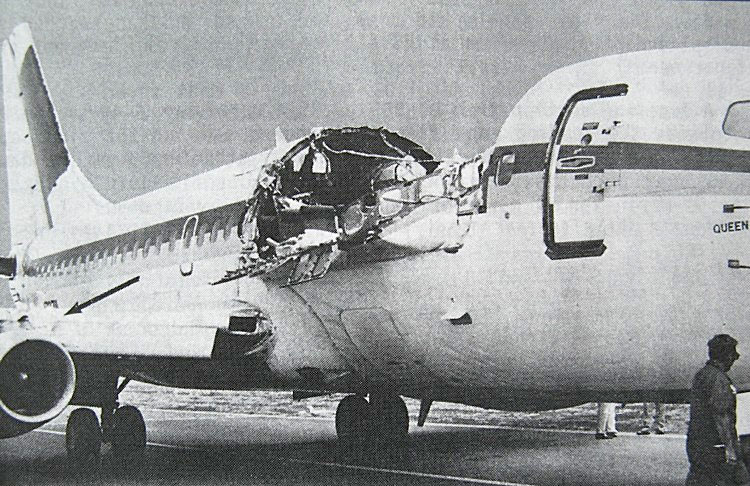

One generation ago, at the start of the 1990s, attention increasingly moved from designers to maintainers and a wider range of possible maintenance failures and associated human factors. Although maintenance-related accidents remained relatively rare, a cluster grabbed an expanding industry’s attention. The 1988 Aloha Airlines Boeing 737 [3] and 1989 United Airlines McDonnell Douglas DC-10 [4] accidents highlighted concerns about inspection standards and human factors. The 1990 British Airways British Aircraft Corp. BAC 1-11 [5] and 1991 Continental Express Embraer EMB-120 [6] accidents highlighted inadvertent misidentification of parts and incomplete maintenance. Thinking was also influenced by popular books, such as those by James Reason, published in 1991 [7] and 1997 [8], and other research that described a wider range of maintenance errors in a more sophisticated way.

In 1998, the European Joint Aviation Authorities launched a working group to address maintenance errors, which led to major human factors–focused changes in the regulations on aircraft maintenance in 2003 [9]. While several changes were made, the two main elements were human factors training “for all personnel involved in maintenance” and enhanced occurrence reporting. Both were sensible, well-intentioned initiatives.

Unintended Consequences

However, the implementation of these regulations, with their focus on training and reporting, may have had unintended consequences and, in the years since, failed to deliver the expected benefits.

“Corporations are victims of the great training robbery,” said a recent paper [10]. Despite spending $356 billion globally in 2015 on training of all types, “they are not getting a good return on their investment.” Earlier research noted that, in relation to “change programs” generally, “well-trained and motivated employees could not apply their new knowledge and skills when they returned to their units, which were entrenched in established ways of doing things. … Individuals had less power to change the system surrounding them than that system had to shape them.” [11]

Maintenance human factors training, if done only for compliance and without proper organizational support to facilitate change, will invariably suffer the same fate.

The new knowledge and skills gained in one- to three-day human factors courses are also limited, even before accounting for perhaps 50 percent “scrap learning,” which is learning never subsequently applied in practice. Unlike crew resource management (CRM) training for aircrew, specific maintenance human factors skills are not honed offline in the equivalent of regular simulator sessions. Training, however, remains an addictive “solution,” at least to lazy managers and unscrupulous training providers.

Occurrence reporting naturally encompassed not only reporting incidents but also near misses, errors and the factors that provoke them. Most organizations implemented bureaucratic reporting mechanisms to capture and analyze reports. They also trained investigators, often focusing on interviewing skills. While such skills are essential and need careful development, such training could lead to a perception that the individual is the subject of the investigation. There also tended to be less emphasis on developing investigators to actively find and facilitate solutions.

It was also typical, and sensible, to simultaneously adopt a just culture policy. These policies are often accompanied by models, typically flowcharts, to illustrate the spectrum of behaviors from simple errors to deliberate sabotage. While useful educationally, these have sometimes been misused as decision aids for routinely determining culpability, treating front-line personnel as culpable until proven otherwise. Without mutual trust, learning and improvement suffer.

In comparison, less attention was often paid to techniques for identifying and anticipating underlying systemic problems.

While these human factors initiatives were intended to help front-line personnel, the implementation of some of them gave the impression of perfunctory mass training, while companies lazily relied on front-line personnel to report problems in lieu of adopting proactive safety improvement.

So What Should We Do?

David King, former chief inspector of the U.K. AAIB, made the point at a May 2015 Royal Aeronautical Society conference that after a generation of focus on human factors, similar human factors errors are still occurring [12]. He challenged the audience to find a next-generation approach, rather than just doing more of the same.

The focus needs to shift from seeing maintainers as generators of errors to seeing them as collaborators in enhancing maintenance performance. A good analogy is to treat them like championship athletes aiming to win rather than simply “not to lose.” [13]

How do we achieve a more inclusive, trust-building and proactive next-generation approach to genuinely enhance front-line human performance?

One thing the industry must do is move from blindly pushing classroom training to enabling holistic individual and organizational learning. There is a view that only 10 percent of learning comes from formal courses, 20 percent from informal learning (e.g., personal research, discussion with colleagues and mentoring) and 70 percent from doing the job.

Exploiting this 70:20:10 concept [14] requires a greater emphasis on workplace coaching and the leadership skills of supervisors [15]. We need to become better at observing work in order to make “marginal gains”: small but numerous performance improvements, rather than just compliance. These, in turn, will increase mindfulness, open dialogue, trust, learning and systemic improvement, rather than reliance on passive reporting systems and a focus on culpability determination.

Increasing use of safety management systems should help but could reinforce bad habits (if, for example, their implementation just emphasizes a tidal wave of compliance-centric mass classroom training and quantitative data-gathering at the expense of qualitative knowledge and experience).

We also need designers to return to what we knew 70 years ago and strive for a design approach that delivers more human-centered designs, resistant to error [16]. For example, certification requirements for the human-centered design of flying controls to minimize or prevent assembly error have been unchanged since 1964 [17]; warnings in manuals are still allowed in place of fail-safe methods to prevent defects; ambiguous technical publications remain a threat; and designers often have unrealistic expectations of the perfection of others [18].

Andy Evans is an aviation safety and airworthiness consultant at Aerossurance, an Aberdeen, Scotland-based aviation consultancy. He also is a member of Flight Safety Foundation’s European Advisory Committee.

Notes

1. http://aerossurance.com/safety-management/hf-murphys-or-holts-law/

2. Newton, E. “The Investigation of Air Accidents.” Journal of the Royal Aeronautical Society Volume 68 (1964): 156–164.

3. http://www.ntsb.gov/investigations/accidentreports/pages/AAR8903.aspx

4. http://www.ntsb.gov/investigations/AccidentReports/Pages/AAR9006.aspx

5. https://www.gov.uk/aaib-reports/1-1992-bac-one-eleven-g-bjrt-10-june-1990

6. http://www.ntsb.gov/investigations/AccidentReports/Pages/AAR9204.aspx

7. Reason, James. Human Error. Cambridge, United Kingdom: Cambridge University Press, 1991.

8. Reason, James. Managing the Risks of Organizational Accidents. Aldershot, United Kingdom: Ashgate, 1997.

9. JAR-145 Approved Maintenance Organisations, Amendment 5 (1 Jan 2003).

10. Beer, M., et al. “Why Leadership Training Fails – and What to Do About It.” Harvard Business Review (October 2016): 50–57.

11. Eisenstat, R., et al. “Why Change Programs Don’t Produce Change.” Harvard Business Review (November–December 1990): 158–166.

12. http://aerossurance.com/safety-management/maintenance-hf-next-generation/

13. http://aerossurance.com/safety-management/maintenance-going-for-gold/

14. http://aerossurance.com/safety-management/70-20-10_learning/

15. Evans, A. and Parker, J. “Beyond Safety Management Systems.” AeroSafety World Volume 3 (May 2008): 12–17.

16. http://aerossurance.com/helicopters/first-11-design-maintenance-hf/

17. http://aerossurance.com/safety-management/pa46-misrigged-flying-controls/

18. http://aerossurance.com/safety-management/aaib-boeing-not-adequate/

Featured image and vignette: © shaineast | Vector Stock